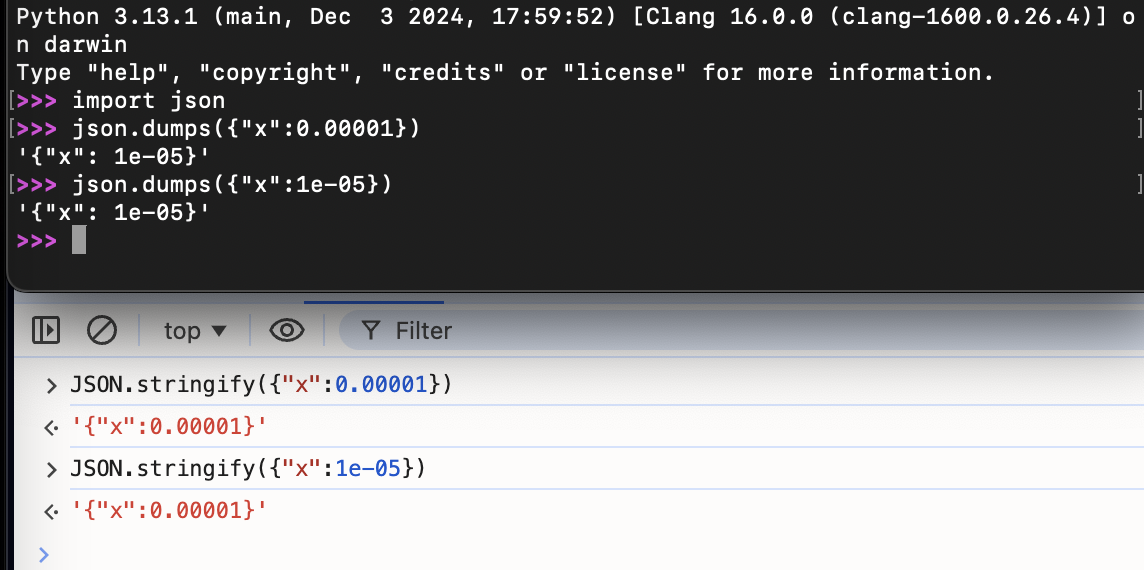

What’s wrong? They’re the same value, no?

I think they’re pointing out that Python outputs E notation while JavaScript outputs decimal notation.

Edit: Wasn’t agreeing with it, just explaining what they were pointing out.
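A minimal Python sketch of the behavior being pointed out, using only the standard `json` module. `json.dumps` writes floats using `float.__repr__`, which switches to exponent notation below `1e-4`. Only the spelling changes; the value is the same.

```python
import json

# float.__repr__ uses exponent notation for magnitudes below 1e-4
# (and at or above 1e16), and json.dumps reuses that representation.
small = json.dumps({"x": 0.00001})
boundary = json.dumps({"x": 0.0001})

print(small)     # {"x": 1e-05}
print(boundary)  # {"x": 0.0001}

# Both spellings parse back to the same float.
assert json.loads(small) == json.loads('{"x": 0.00001}')
```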

Yeah, but that… doesn’t really matter. So it doesn’t really make sense to post that here, especially with that headline.

Would this cause a problem? I’m assuming this would be deserialized to the same value, no?

But that’s a stringify method, tho.

JS passes a float to the console, and the console prints the float however it wants to. Just do a strict comparison when you want to compare a variable to `1e-5`, because a string of `0.00001` should be passed through `parseFloat` (or whatever your language’s equivalent is) before you compare it to a variable with the value `0.00001`.
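A Python analogue of the `parseFloat` advice, where `float()` plays that role. The `raw` and `target` values are just illustrative.

```python
# A str and a float never compare equal in Python, so parse first.
raw = "0.00001"   # e.g. a value read from a text field or query string
target = 1e-5

print(raw == target)         # False: str vs float
print(float(raw) == target)  # True: both are the same IEEE 754 double
```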

Both of these are valid notations. The JSON spec also says that numbers are floats, so it’s perfectly acceptable to denote them this way. This is why currency should never use number notation, but a string instead. If it must be precise, use a string.
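A small sketch of the string-for-money idea, assuming the receiver decodes amounts with Python’s `decimal.Decimal`. The `price` and `fee` field names are hypothetical.

```python
import json
from decimal import Decimal

# Binary floats cannot represent most decimal fractions exactly.
print(0.1 + 0.2 == 0.3)  # False

# Sending amounts as JSON strings keeps the exact decimal text,
# which can then be handed to a decimal type instead of a float.
payload = json.loads('{"price": "0.10", "fee": "0.20"}')
total = Decimal(payload["price"]) + Decimal(payload["fee"])
print(total)  # 0.30
```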

Or, use the smallest denomination. In the US, that’s typically tenths of a penny. So, $1 = 1000. Then everything uses integer arithmetic.
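A sketch of the integer approach, assuming amounts are stored as integer mills per the “$1 = 1000” convention above. `format_usd` is a hypothetical helper and only handles non-negative amounts.

```python
# Amounts held as integer mills (1/1000 of a dollar).
MILLS_PER_DOLLAR = 1000

def format_usd(mills: int) -> str:
    """Render a non-negative amount in mills as a dollar string."""
    dollars, rem = divmod(mills, MILLS_PER_DOLLAR)
    return f"${dollars}.{rem:03d}"

items = [3499, 1250, 10]  # $3.499, $1.250, $0.010
total = sum(items)        # integer addition: exact, no rounding error
print(total)              # 4759
print(format_usd(total))  # $4.759
```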

Of course, JS doesn’t actually have integers, so yeah, strings are probably best.
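Python’s `float` is (on mainstream platforms) an IEEE 754 double, the same format as a JavaScript `Number`, so it can illustrate where integer arithmetic on doubles stays exact. Every integer up to 2**53 is representable, which is why JS defines `Number.MAX_SAFE_INTEGER` as 2**53 - 1. That is roughly nine trillion dollars when counting in mills.

```python
# Where integer arithmetic on IEEE 754 doubles stops being exact.
LIMIT = 2 ** 53  # JS Number.MAX_SAFE_INTEGER + 1

print(float(LIMIT - 1) + 1 == float(LIMIT))  # True: still exact
print(float(LIMIT) + 1 == float(LIMIT))      # True: LIMIT + 1 rounds back down
print(LIMIT + 1 == LIMIT)                    # False: Python ints are arbitrary precision
```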

But the second you go international that goes out the window. There are currencies with 3 and 4 digits of precision. There are currencies that are integers. Keeping track of that is a nightmare using a numerical value. It’s safest just to represent it as a string.

Every currency has a smallest denomination.

Right, but then you have to pass that information around so people know how to deserialize it, and it means things like the UI need to do exact currency conversions on their side that must match the server too. So if you are doing USD, you would not only need to pass 1000 to denote $10, but also the currency name, USD, and its precision value of 2. However, if you are using the Dinar and they pass the same 1000, they would need to make sure they pass the precision of 3, and the UI would need to calculate that. (And remember that JS numbers are floats, so you run the risk that the value may not be the same as what the server would see.)
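A sketch of the integer-plus-precision format described above. The payload shape (`amount`, `currency`, `exponent`) is hypothetical, and BHD (Bahraini dinar, three decimal places in ISO 4217) stands in for “the Dinar”.

```python
import json
from decimal import Decimal

def decode_amount(payload: str) -> tuple[str, Decimal]:
    """Turn integer minor units plus an exponent into an exact Decimal."""
    data = json.loads(payload)
    # scaleb(-n) shifts the decimal exponent, i.e. divides by 10**n exactly.
    value = Decimal(data["amount"]).scaleb(-data["exponent"])
    return data["currency"], value

print(decode_amount('{"amount": 1000, "currency": "USD", "exponent": 2}'))
# ('USD', Decimal('10.00'))
print(decode_amount('{"amount": 1000, "currency": "BHD", "exponent": 3}'))
# ('BHD', Decimal('1.000'))
```

The same integer means different amounts depending on the exponent, which is exactly the extra information the comment says has to travel with it.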

The best course of action is to format it on the server. I’ve found that passing the currency code along with the stringified value of “10.00”, or “1.000” in the Dinar case, works well. That way the UI knows exactly what it should show. It’s also extremely rare that someone needs to modify a value on the frontend, but if they do, most currency libraries prefer strings anyway.
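A sketch of formatting on the server, assuming a hypothetical `DECIMAL_PLACES` table (a small subset of ISO 4217 minor units) and Python’s `decimal` module. Rounding half to even is a choice made here, not something the comment specifies.

```python
import json
from decimal import Decimal, ROUND_HALF_EVEN

# Hypothetical lookup table: decimal places per currency code.
DECIMAL_PLACES = {"USD": 2, "JPY": 0, "BHD": 3}

def to_wire(currency: str, amount: Decimal) -> str:
    """Serialize an amount as a string padded to the currency's precision."""
    places = DECIMAL_PLACES[currency]
    step = Decimal(1).scaleb(-places)  # 0.01 for USD, 1 for JPY, 0.001 for BHD
    exact = amount.quantize(step, rounding=ROUND_HALF_EVEN)
    return json.dumps({"currency": currency, "amount": str(exact)})

print(to_wire("USD", Decimal("10")))  # {"currency": "USD", "amount": "10.00"}
print(to_wire("BHD", Decimal("1")))   # {"currency": "BHD", "amount": "1.000"}
```

The client can then display the string as-is, or pass it to a decimal type if it really needs to compute.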

Source: I’ve done FinTech for trading companies, banks, and payment processors. There are a lot of gotchas with money

If you’re passing a string, and you don’t know what currency it is, you have the exact same problem as passing an int and not knowing what currency it is. USD’s smallest denomination is 1/10 cent (gas stations usually charge in tenths of a cent, and half pennies are still legal tender, even though they’re not minted anymore), so the string representation in your examples would be exactly the same for USD and Dinar.

I agree that the best way to represent it between server and client is as a string, but that doesn’t work when you need to perform calculations, so in that case, the best way to do the calculations is to use the smallest denomination, then use banker’s rounding on the result, then use the int for storage and turn it back into a string representing the default denomination for transit. Or, just use ints representing the smallest denomination everywhere except displaying to the user. Even JavaScript can handle integer arithmetic.
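An integer-only sketch of the calculate-then-round workflow, assuming amounts in cents and a rate expressed as a fraction `num / den`. `scale_half_even` and `to_display` are hypothetical helpers, and 7.5% tax is just an example.

```python
def scale_half_even(amount: int, num: int, den: int) -> int:
    """Return amount * num / den rounded half to even, using only integers."""
    if den <= 0:
        raise ValueError("den must be positive")
    q, r = divmod(amount * num, den)  # floor quotient, 0 <= r < den
    # Round up past the halfway point, or at exactly half when q is odd.
    if 2 * r > den or (2 * r == den and q % 2 == 1):
        q += 1
    return q

def to_display(minor: int, places: int) -> str:
    """Turn an integer amount in minor units back into a decimal string."""
    sign = "-" if minor < 0 else ""
    whole, frac = divmod(abs(minor), 10 ** places)
    return f"{sign}{whole}.{frac:0{places}d}" if places else f"{sign}{whole}"

# 7.5% tax on $12.34, with amounts in cents.
tax = scale_half_even(1234, 75, 1000)
print(tax, to_display(tax, 2))  # 93 0.93
```

Because the rate is kept as an exact fraction, no float ever enters the calculation; the only rounding happens once, in `scale_half_even`.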

Source: I’ve also done fintech for loan and retail companies. Yes, there are definitely a lot of gotchas, so using integers is best when you need to do calculations.

Counterpoint… Both are generating perfectly valid JSON, so who cares?

```
Python 3.13.2 (main, Feb 5 2025, 08:05:21) [GCC 14.2.1 20250128]
Type 'copyright', 'credits' or 'license' for more information
IPython 9.0.2 -- An enhanced Interactive Python. Type '?' for help.
Tip: IPython 9.0+ have hooks to integrate AI/LLM completions.

In [1]: import json

In [2]: json.loads('{"x": 1e-05}')
Out[2]: {'x': 1e-05}

In [3]: json.loads('{"x":0.00001}')
Out[3]: {'x': 1e-05}
```

```
Welcome to Node.js v20.3.1.
Type ".help" for more information.
> JSON.parse('{"x":0.00001}')
{ x: 0.00001 }
> JSON.parse('{"x": 1e-05}')
{ x: 0.00001 }
```

JavaScript and Python both happily accept either format from the string and convert it into a float they are happy with.

The value after the `:` isn’t in quotes, so it is a literal value. Thus, a float value will be parsed as a float, whether it is `1E-5` or `0.00001`. They are numerically equivalent, but not stringly equivalent. If you are having errors parsing your JSON, then use a proper JSON library instead of trying to roll your own.
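A quick Python check of “numerically equivalent, but not stringly equivalent”: compare parsed values, not raw JSON text.

```python
import json

a = '{"x": 1e-05}'
b = '{"x":0.00001}'

print(a == b)                          # False: the text differs
print(json.loads(a) == json.loads(b))  # True: the parsed values match
```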

Oh god, this brings back memories of setting up a BigQuery project and running into issues with JSON strict parsing and all our fixed decimal money types.

That sounds like you ran into problems when deserializing a number value from JSON, which then got slightly changed due to floating point shenanigans. That’s technically not JSON’s fault. JSON numbers aren’t IEEE754. They’re just numbers. It’s only the deserializers that usually choose to represent JSON numbers as floating point values.
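An illustration with Python’s standard `json` module, which decodes JSON numbers that have a fraction or exponent to `float` and plain integers to arbitrary-precision `int`. Both are choices made by the deserializer, not by JSON.

```python
import json
from decimal import Decimal

# The JSON text "0.1" is an exact decimal; default float decoding turns it
# into the nearest binary double, which is not exactly 0.1.
as_float = json.loads("0.1")
print(Decimal(as_float) == Decimal("0.1"))  # False

# Integers decode to Python's arbitrary-precision int, so large values survive.
big = json.loads("12345678901234567890")
print(big == 12345678901234567890)  # True
print(float(big) == big)            # False: a double would lose digits
```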

Quoting https://www.rfc-editor.org/rfc/rfc8259#page-7

> A number is represented in base 10 using decimal digits. It contains an integer component that may be prefixed with an optional minus sign, which may be followed by a fraction part and/or an exponent part. […]
>
> A fraction part is a decimal point followed by one or more digits.
>
> Numeric values that cannot be represented in the grammar below (such as Infinity and NaN) are not permitted.
>
> This specification allows implementations to set limits on the range and precision of numbers accepted. Since software that implements IEEE 754 binary64 (double precision) numbers [IEEE754] is generally available and widely used, good interoperability can be achieved by implementations that expect no more precision or range than these provide, in the sense that implementations will approximate JSON numbers within the expected precision. A JSON number such as 1E400 or 3.141592653589793238462643383279 may indicate potential interoperability problems, since it suggests that the software that created it expects receiving software to have greater capabilities for numeric magnitude and precision than is widely available.
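What the RFC’s two example numbers turn into under Python’s default `json.loads`, which decodes them as IEEE 754 doubles.

```python
import json
import math

huge = json.loads("1E400")
pi_ish = json.loads("3.141592653589793238462643383279")

print(huge)               # inf: beyond the largest double (about 1.8e308)
print(pi_ish)             # 3.141592653589793: extra digits are dropped
print(pi_ish == math.pi)  # True
```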

Yeah, the problem wasn’t that there were json numbers, it was that they were being parsed and deserialized wrong. And boy, were they being parsed and deserialized wrong in a lot of places.

It’s just one extra arg in the parsing method to fix it, just a pain in the ass rebuilding half a data warehouse to fix the existing data.
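The thread doesn’t say which parser or argument was involved. In Python’s standard `json` module, the analogous one-argument change is `parse_float=decimal.Decimal`, shown here only as an example of the idea.

```python
import json
from decimal import Decimal

raw = '{"amount": 0.10, "fee": 0.20}'

# Default: JSON numbers with a fraction or exponent become binary floats.
loose = json.loads(raw)
print(loose["amount"] + loose["fee"])  # 0.30000000000000004

# One extra argument: decode those numbers with decimal.Decimal instead.
exact = json.loads(raw, parse_float=Decimal)
print(exact["amount"] + exact["fee"])  # 0.30
```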