Just some humble guys who pooled their money to buy it for $100,000 and then renovate it for $3.3mil.

Thelen brought a jar of lithium iron phosphate to the podium. Grim-faced and wearing a navy blue suit, he poured out a small sample of the substance into a bottle for the audience to pass around. Then he began reading safety guidelines for handling it. “If you get it on the skin, wash it off,” he said. “If you get it in your mouth, drink plenty of water.”

Then, Thelen opened the jar again, this time dipping his index finger inside. “This is my finger,” he said, putting his finger in his mouth. A sucking sound was heard across the room. He raised his finger up high. “That’s how non-toxic this material is.”

The No Gos were not impressed.

Worked fine for Midgley, after all.

Gotcha. Yeah, I can endorse that viewpoint.

To me, “engineer” implies confidence in the specific result of what you’re making.

So like, you can produce an ambiguous image like The Dress by accident, but that’s not engineering it.

The researchers who made the Socks and Crocs images did engineer them.

Privacy doesn’t mean that nobody can tell what you’re thinking. It means that you will always be more justified in believing yourself to be conscious than in believing others are conscious. There will always be an asymmetry there.

Replaying neural activity is impressive, but it doesn’t prove the original recorded subject was conscious quite as robustly as my daily subjective experience proves my own consciousness to myself. For example, you could conceivably fabricate an entirely original neural recording of a person who never existed at all.

I added some episodes of Walden Pod to my comment, so check those out if you wanna go deeper, but I’ll still give a tl;dl here.

Privacy of consciousness is simply that there’s a permanent asymmetry of how well you can know your own mind vs. the minds of others, no matter how sophisticated you get with physical tools. You will always have a different level of doubt about the sentience of others, compared to your own sentience.

Phenomenal transparency is the idea that your internal experiences (like what pain feels like) are “transparent”, where “transparent” means you can fully understand something’s nature through cognition alone, without needing to measure anything in the physical world to complete your understanding. For example, the concept of a triangle and the fact that 2+2=4 are transparent. Water is opaque, because you have to inspect it with material tools to understand the nature of what you’re referring to.

You probably immediately have some questions or objections, and that’s where I’ll encourage you to check out those episodes. There’s a good reason they’re longer than 5 sentences.

If you wanna continue down the rabbit hole, I added some good stuff to my original comment. But if you’re leaning towards epiphenomenalism, might I recommend this one: https://audioboom.com/posts/8389860-71-against-epiphenomenalism

Edit: I thought of another couple of things for this comment.

You mentioned consciousness not being well-defined. It actually is, and the go-to definition is from 1974: Nagel’s “What Is It Like to Be a Bat?”

It’s a pretty easy read, as are all of the essays in his book Mortal Questions, so if you have a mild interest in this stuff you might enjoy that book.

Very Bad Wizards has at least one episode on it, too. (Link tbd)

Speaking of Very Bad Wizards, they have an episode about sex robots (link tbd) where (IIRC) they talk about the moral problems with having a convincing human replica that can’t actually consent, and that doesn’t even require bringing consciousness into the argument.

Could just say:

If you accept either privacy of consciousness or phenomenal transparency, then philosophical zombies must be conceivable, and therefore physicalism is wrong and you can’t engineer consciousness by mimicking brain states.

Edit:

I guess I should’ve expected this, but I’m glad to see multiple people wanted to dive deep into this!

I don’t have the expertise or time to truly do it justice myself, so if you want to go deep on this topic I’m going to recommend my favorite communicator on non-materialist (but also non-religious) takes on consciousness, Emerson Green:

But I’m trying to give a tl;dl to the first few replies at least.

I’ve been using Orion on iOS for a while. It’s not bad.

Not that ridiculous, really.

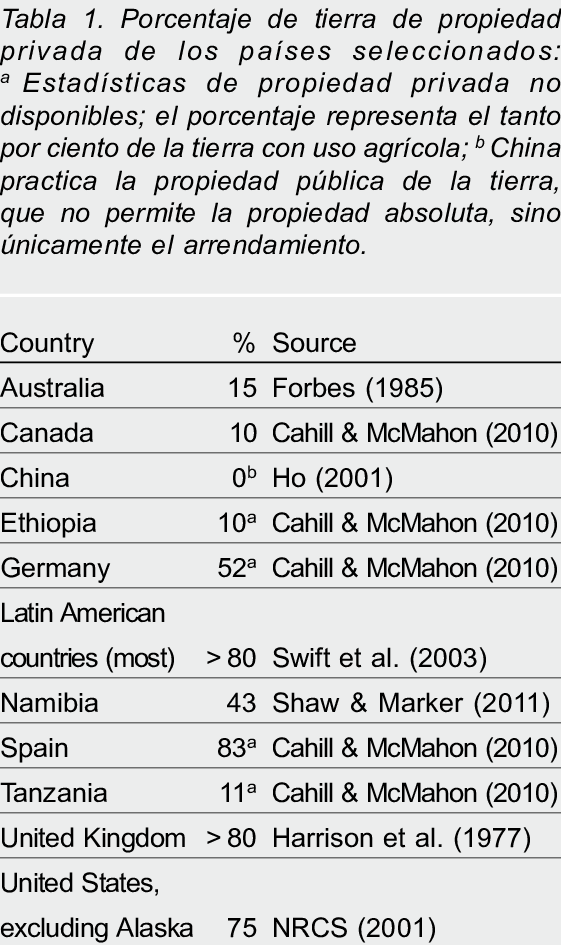

Percentage of land area of select countries that is in private ownership

In these sexual relationships, availability and consent will always be taken for granted, something that’s never taken for granted in a sexual relationship with another human being.

People could get used to interacting in a way in which the other person isn’t taken into account as much, meaning that sexual partners could be instrumentalized for the purpose of having sex. That is to say the ‘human-humanoid’ interaction could be transferred to the relationship between two human beings.

…

Unfortunately, however, these advances aren’t being accompanied by deep reflections about the consequences that sex with robots can have.

On the contrary. I want people to have their own opinions, and to buy the things that suit their tastes even if they seem silly to me.

And I want those things to have fair, consumer-friendly regulations applied to them.

And when companies try to abuse their consumers, I want us to criticize the company rather than the consumer.

When you blame consumers for allowing antisocial tech into their lives, you’re doing free work for the tech barons.

Pretty nice way to bridge the gap between documentation and automation.

We really are obsessed with replicating any and all sci-fi cautionary tales, aren’t we?

I was thinking about that while watching a true crime doc the other day.

Perp killed his girlfriend, cops had a hard time apprehending the guy, but eventually he killed himself rather than be captured.

Victim’s family was angry cuz they were denied even the last bit of justice they might get. Perp’s family lost a member permanently instead of being able to visit them. Perp never got a chance at rehabilitation. Victim is just as dead as before — maybe even more, cuz one more person who knew them well is dead too.

And I’m like… Who actually wins here???

You lose a loved one, you know who did it, but you can never get to them and ask why, because contact is life-threatening for them. How do you heal?

I appreciate the reply! And I’m sure I’m missing something, but… Why can’t you just lie about the model you used?

Isn’t this still subject to the same problem, where a system can lie about its inference chain by returning a plausible chain which wasn’t the actual chain used for the conclusion? (I’m thinking from the perspective of a consumer sending an API request, not the service provider directly accessing the model.)

Also:

Any time I see a highly technical post talking about AI and/or crypto, I imagine a skilled accountant living in the middle of mob territory. They may not be directly involved in any scams themselves, but they gotta know that their neighbors are crooked and a lot of their customers are gonna use their services in nefarious ways.

Article had a lot of good content on the complexity of defining and evaluating “critical thinking” but only a couple surface-level things about AI.

So, I used to be a huge fan of this podcast, The Pessimists Archive, which catalogued all the times when people freaked out over stuff that seems silly today.

But the thing is: We’ve also failed to freak out sufficiently over some pretty important stuff, and people who were mocked at the time have later been proven to be right.

And then there’s also the paradox of risk management: Taking a risk seriously and working to mitigate it often keeps the risk from materializing, which makes the mitigation look like wasted effort.

All that is to say: You really should take each case on its own merits.

Should be using Australium